The question of how much data allowance Primordial Radio uses has been asked a few times.

The simple answer is about 30 megabytes per hour, which means 1GB of data will last you about 33 hours.
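As a quick sanity check of that arithmetic, here is a minimal Python sketch. The function name is made up for illustration, and it assumes 1GB is counted as 1000 megabytes. Counting it as 1024 stretches the figure to about 34 hours.

```python
def hours_of_listening(allowance_mb: float, mb_per_hour: float) -> float:
    """How many hours of streaming a data allowance covers (hypothetical helper)."""
    return allowance_mb / mb_per_hour

# Assumption: 1GB taken as 1000 megabytes.
print(round(hours_of_listening(1000, 30), 1))  # 33.3
```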

If you’re interested, the not-so-simple answer goes as follows.

Primordial are cunning: they use a 63Kbit AAC stream. The bit rate is, quite literally, how much data per second the stream uses. The higher the bit rate, the higher the quality of audio that can be squeezed in. It’s a trade-off between data and quality. But there’s another factor: the technique used to encode the audio.

If Primordial used a 63Kbit MP3 stream it would sound dismal, because MP3 is a pretty old and inefficient audio encoding technique. AAC squeezes better audio out of the same bit rate, so Primordial can get away with a much lower one, keeping the amount of data you use to listen low and the quality acceptable.

BBC Radio 3, by comparison, have a 320Kbit AAC stream (amongst others). You can get your classical music fix in super-high quality, but it will munch through about 150 megabytes per hour.

Now, the relationship between the bit rate and the amount of data it uses isn’t entirely straightforward. In data transmission we tend to talk about bits per second, whereas data allowances are quoted in bytes, or more likely gigabytes.

Your broadband connection, for instance, is almost certainly specified in megabits per second. Long story short, the bit is the smallest unit that can be sent, so it’s most accurate to quote the speed of a connection in bits per second.

A byte is almost always 8 bits, but some types of communication add extra bits to regulate the transmission, so converting bits per second to bytes per second isn’t always a straight division by 8. It’s close enough for a ready-reckoner though:
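To show why the divisor isn’t always 8, here is a small sketch with a hypothetical `bytes_per_second` helper. The 10-bit case uses classic asynchronous serial “8N1” framing (one start bit, eight data bits, one stop bit) purely as a familiar example of extra control bits. It isn’t a claim about how Primordial’s stream is carried.

```python
def bytes_per_second(bits_per_second: float, bits_per_byte: int = 8) -> float:
    """Convert a line rate to bytes per second (hypothetical helper).

    bits_per_byte is 8 for a plain byte stream. Framings that add control
    bits, e.g. serial "8N1" (1 start + 8 data + 1 stop = 10 bits), deliver
    fewer bytes for the same bit rate.
    """
    return bits_per_second / bits_per_byte

print(bytes_per_second(63_000))      # 7875.0
print(bytes_per_second(63_000, 10))  # 6300.0
```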

63 / 8 = 7.875, or roughly 8

We need about 8 kilobytes of data for one second of audio. We can then easily multiply that up:

8 * 60 = 480 Kbytes per minute

480 * 60 = 28800 Kbytes per hour

Taking a megabyte as 1024 kilobytes:

28800 / 1024 ≈ 28 Mbytes per hour
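The same ready-reckoner as a Python sketch. The function name is hypothetical, and unlike the hand calculation it doesn’t round 63 / 8 up to 8, which is why it lands a little under 28. It also covers the Radio 3 comparison.

```python
def mb_per_hour(kbit_per_s: float) -> float:
    """Ready-reckoner: audio bit rate (Kbit/s) to megabytes per hour, no overhead."""
    kbytes_per_s = kbit_per_s / 8               # 8 bits per byte
    kbytes_per_hour = kbytes_per_s * 60 * 60    # seconds -> minutes -> hours
    return kbytes_per_hour / 1024               # 1024 kilobytes per megabyte

print(round(mb_per_hour(63), 1))   # 27.7  (Primordial)
print(round(mb_per_hour(320), 1))  # 140.6 (BBC Radio 3's AAC stream)
```

At 320Kbit the raw figure is about 141 megabytes per hour, so the 150 quoted for Radio 3 is in the right ballpark once some overhead is added.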

This, however, is always going to be optimistically low. Firstly, there is the problem of the envelope. Data on The Internet travels in packets, billions of them. You can think of each packet like a… um… packet. You can’t just lob a bottle of Hendrick’s in the postbox and expect it to get anything other than drunk by the postie. You need to wrap it up, put an address on it and pay postage if you want someone to actually receive it. There are similar overheads on The Internet.

Various different systems are in use, and often there are several layers of packets, one wrapped inside another, each with its own addressing. This means there is a lot more traffic on The Internet than just the useful data.
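To put a rough number on the envelope, here is a sketch that works out header overhead for a single packet. It assumes the stream arrives over TCP on IPv4 with minimum-size headers (20 bytes each, no options) and ignores the Wi-Fi or mobile framing around them, so treat the result as a floor rather than the real figure. The helper name and packet sizes are illustrative.

```python
IPV4_HEADER = 20  # bytes, minimum IPv4 header (no options)
TCP_HEADER = 20   # bytes, minimum TCP header (no options)

def header_overhead(payload_bytes: int,
                    header_bytes: int = IPV4_HEADER + TCP_HEADER) -> float:
    """Extra bytes sent per useful byte for one packet (hypothetical helper)."""
    return header_bytes / payload_bytes

print(f"{header_overhead(1460):.1%}")  # 2.7%  full-size packet
print(f"{header_overhead(200):.1%}")   # 20.0% small packet
```

Small packets pay proportionally far more for their envelope, which is one reason a generous margin is sensible.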

There is also the problem of packet loss. A small amount of data on The Internet simply disappears. This is actually expected: it was designed that way because it’s easier and more resilient. What it does mean, however, is that a small amount of data has to be sent twice.
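A simple way to reason about resends: if each attempt is lost independently with probability p, and lost packets are resent until they get through, the average number of sends per packet is 1 / (1 − p). The sketch below assumes exactly that simplified model (real TCP behaviour is more involved), and the 1% loss rate is a made-up example, not a measurement.

```python
def expected_sends(loss_rate: float) -> float:
    """Average transmissions per packet when each attempt is lost independently
    with probability loss_rate and lost packets are resent until one arrives."""
    if not 0 <= loss_rate < 1:
        raise ValueError("loss_rate must be in [0, 1)")
    return 1 / (1 - loss_rate)

print(f"{expected_sends(0.01):.4f}")  # 1.0101, about 1% extra data
```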

You can pretty much account for all this by simply adding a fudge factor. 20% is usually considered a safe margin. If we take our theoretical figure from earlier:

28 * 1.2 = 33.6 Mbytes per hour

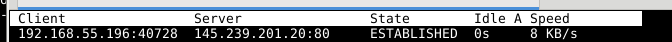

This, of course, is an estimate based on a bit of theory and some practical experience. If you don’t trust these kinds of calculations, you could just look at the speed on your router’s data rate table.

If you wanted a bit more accuracy, though, you could listen to Primordial for, say, a quarter of an hour, record how much data every packet contained and the overall length of each packet, then add them all up.

You’d have to be a right geek to do that though.
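For the geeks, the adding-up step might look like the sketch below. It assumes you already have, from a packet capture, each packet’s full received length and the number of stream bytes it carried. The `Packet` record, the `totals` helper and the sample values are all hypothetical.

```python
from typing import NamedTuple

class Packet(NamedTuple):
    wire_bytes: int     # whole packet as received, headers included
    payload_bytes: int  # stream data carried inside it

def totals(packets: list[Packet]) -> tuple[int, int]:
    """Return (total bytes received, total useful payload bytes)."""
    total = sum(p.wire_bytes for p in packets)
    useful = sum(p.payload_bytes for p in packets)
    return total, useful

# Made-up sample: two full data packets and one carrying no stream data.
sample = [Packet(1514, 1448), Packet(1514, 1448), Packet(66, 0)]
print(totals(sample))  # (3094, 2896)
```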

The total data received was 8,150,537 bytes, of which 7,247,617 were useful content. Those figures can easily be multiplied up to an hour:

Total audio and related data: 27.65 megabytes per hour.

Total data exchanged: 31.1 megabytes per hour.
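Here is the scaling step as a sketch, assuming the quarter-hour sample and the 1024 × 1024 bytes-per-megabyte convention, which reproduces the figures above. The helper name is hypothetical. It also shows the measured overhead works out at about 12.5%, comfortably inside the 20% margin.

```python
MB = 1024 * 1024  # bytes per megabyte, 1024 x 1024 convention

def per_hour_mb(bytes_in_sample: int, sample_minutes: float = 15) -> float:
    """Scale a byte count from a sample period up to megabytes per hour."""
    return bytes_in_sample * (60 / sample_minutes) / MB

total = per_hour_mb(8_150_537)   # everything received
useful = per_hour_mb(7_247_617)  # audio and related data only
print(round(total, 2), round(useful, 2))       # 31.09 27.65
print(f"overhead: {total / useful - 1:.1%}")   # overhead: 12.5%
```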

Naturally, I can’t guarantee these figures absolutely. They were measured over Wi-Fi rather than a mobile network, and there will be differences. There will also be differences between networks, and even between times of day, as The Internet itself changes and adapts to the traffic.

What I can say is that they should be close, within a few percent.